The worst part of Python is its packaging system. The rest of this post is about the tools that I use to lower the friction of making packages for every thing. Being able to pick up where you left off, no matter how long ago you stopped working on something, pays dividends. You will end up wanting to train your model again at some point in the future or, more sadistic, you will leave the company and somebody else will have to train your model.

There will be follow-up questions based on your “final” analysis. This is not the last time that you will run that notebook. This might seem like overkill, but one thing I’ve learned about data science is that nothing is ever over. This ensures that future Ethan can run the notebook. py files, I will define a python package solely to store the python dependencies required for the notebook. Even if I am only creating a notebook for an analysis, and there are no actual python. I no longer use this cloud notebook development environment, but I still use this workflow. It’s nice when constraints incentivize best practices.

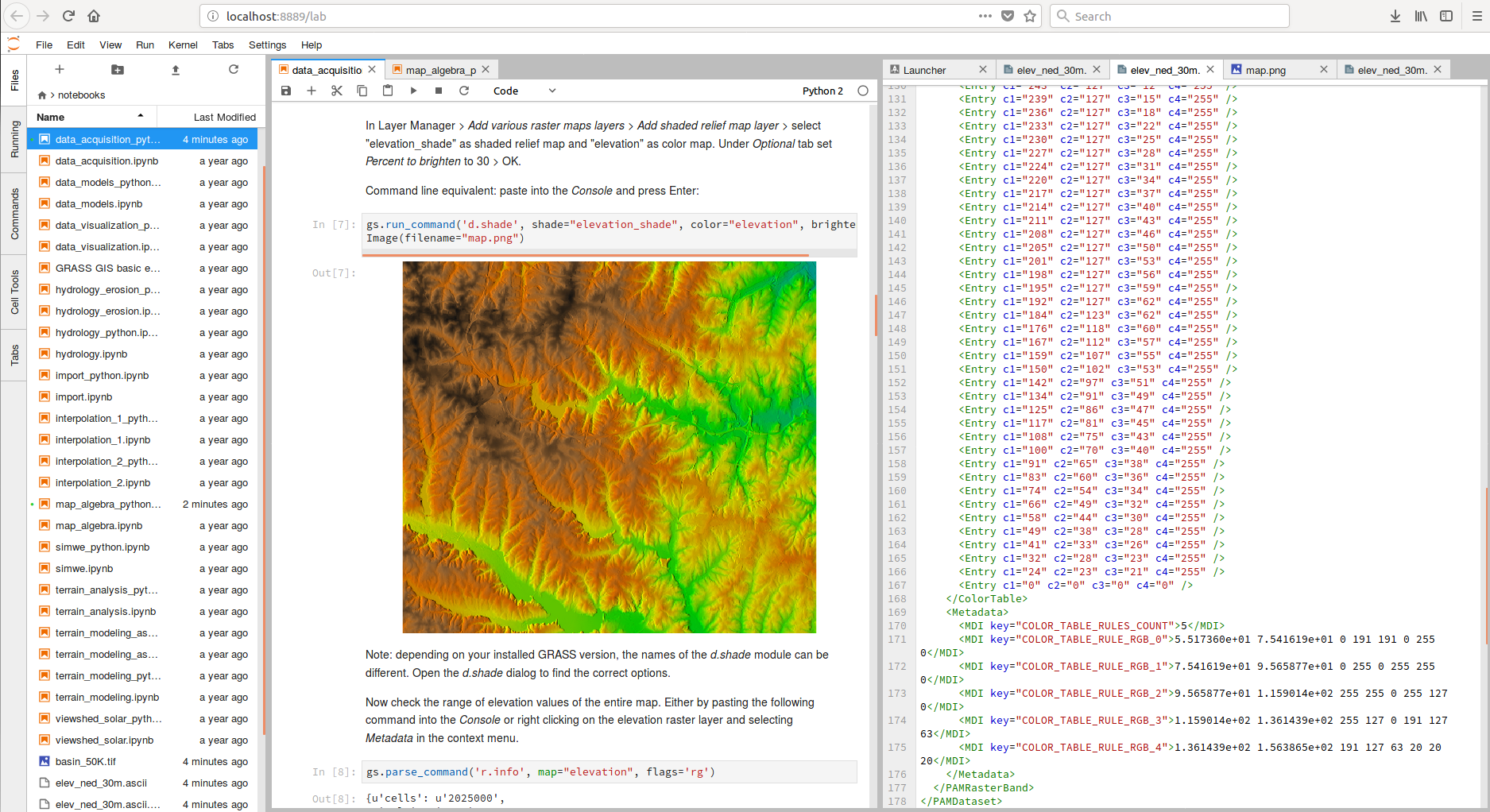

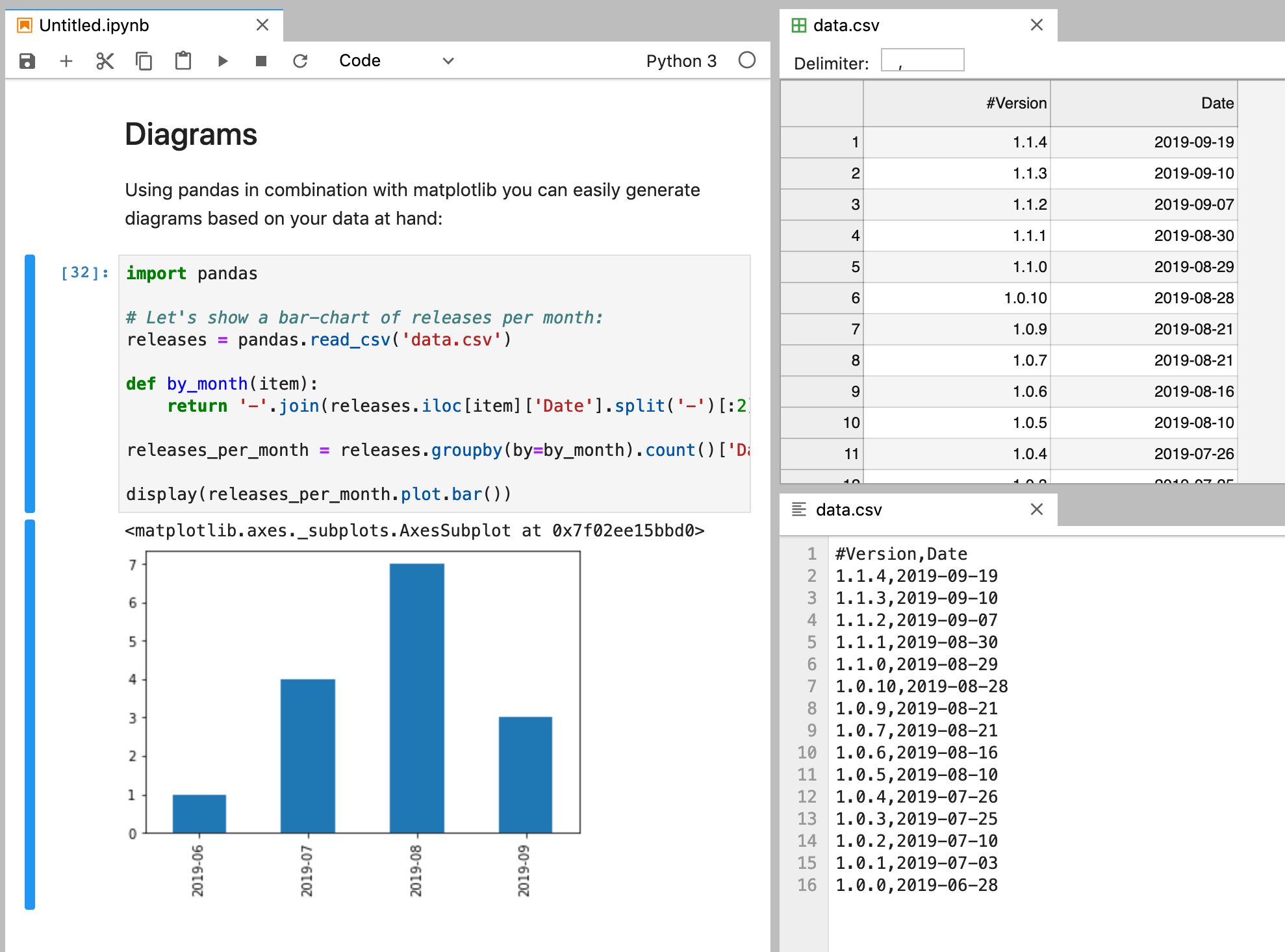

JUPYTERLAB DEPENDENCIES PORTABLE

It turn out, being forced to make your code portable is the same thing as requiring reproducibility in building and running your code. A python package for my code held all of the python dependencies. I used GitHub as the place to store my code and notebooks. Given this, as well as my desire to be able to launch parallel experiments in multiple containers, I was incentivized to make my workflow extremely portable. In particular, I would have to reinstall python packages each day that were not present in the original docker image. In engineering terms, not all of the “state was persisted”.

This is because I shut down the instance each day, and many things would get “reset”.

JUPYTERLAB DEPENDENCIES FREE

I was also free to spin up multiple instances and run code on each one.Ī result of this development environment was that I optimized my workflow to be as reproducible as possible. I had flexibility in picking the type of machine that the container was running on, so this allowed me to develop and run code on datasets that were too big for my local machine. I spent a year developing code almost exclusively within JupyterLab running on a docker container in the cloud. Notably, my workflow is set up to make it simple to stay consistent. Let’s back up a little bit so that I can tell you why I do this. Oh yeah, and every package gets its own virtual environment. Spinning up a quick jupyter notebook to check something out? Build a package first. Big projects obviously get a package, but so does every tiny analysis.
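Here is a minimal sketch of the “every analysis gets a package and its own virtual environment” idea for a notebook-only analysis. It uses only the standard library. Everything in it is a hypothetical illustration rather than the author’s actual tooling. The `scaffold_analysis` and `render_pyproject` helpers, the project layout, the `churn-analysis` name, and the dependency list are all made up, and the `pyproject.toml` assumes a setuptools build backend. The package contains no modules and exists only to record what the notebook needs.

```python
"""Hypothetical sketch: a notebook-only analysis gets its own package and venv."""
from __future__ import annotations

import tempfile
import venv
from pathlib import Path

# Minimal pyproject.toml, assuming a setuptools build backend. The project
# ships no importable code; it exists only to record the notebook's deps.
PYPROJECT_TEMPLATE = """\
[build-system]
requires = ["setuptools>=61"]
build-backend = "setuptools.build_meta"

[project]
name = "{name}"
version = "0.1.0"
dependencies = [
{deps}
]

[tool.setuptools]
packages = []  # no modules to install, just dependencies
"""


def render_pyproject(name: str, dependencies: list[str]) -> str:
    """Render pyproject.toml text listing one dependency per line."""
    deps = "\n".join(f'    "{dep}",' for dep in dependencies)
    return PYPROJECT_TEMPLATE.format(name=name, deps=deps)


def scaffold_analysis(root: Path, name: str, dependencies: list[str],
                      with_pip: bool = True) -> Path:
    """Create root/name with a pyproject.toml, a notebooks/ dir, and a .venv."""
    project = root / name
    (project / "notebooks").mkdir(parents=True, exist_ok=True)
    (project / "pyproject.toml").write_text(render_pyproject(name, dependencies))
    # Every package gets its own virtual environment, kept inside the project.
    venv.create(project / ".venv", with_pip=with_pip)
    return project


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        # with_pip=False skips bootstrapping pip, which keeps the demo fast.
        project = scaffold_analysis(Path(tmp), "churn-analysis",
                                    ["pandas", "jupyterlab"], with_pip=False)
        print((project / "pyproject.toml").read_text())
```

From inside the project, you would then run something like `.venv/bin/pip install .` (on Linux or macOS) to install those dependencies into the project’s own environment. Keeping the environment next to its `pyproject.toml` is only one choice; a central directory of environments would serve the same purpose.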
